Memristor Chips: The Missing Link in Computer Memory Evolution

Circuit theory has long rested on three fundamental passive components: resistors, capacitors, and inductors. But what if there was a fourth element that could change how our devices store and process information? Enter the memristor, a circuit element first proposed by Leon Chua in 1971 that remained purely theoretical until researchers at HP Labs reported a working device in 2008. Fifteen years later, memristors are emerging from research labs and beginning to find their way into commercial applications, promising to reshape computing architecture as we know it. With properties that bridge the gap between processing and memory, these components could ease one of computing's most persistent bottlenecks.

What exactly is a memristor?

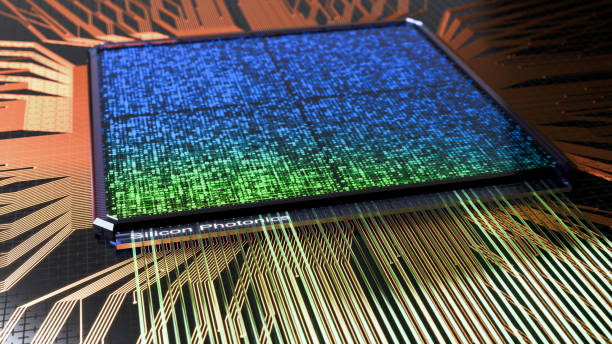

At its core, a memristor (memory resistor) is an electronic component whose resistance depends on how much charge has flowed through it, and in which direction; in that sense it “remembers” its electrical history. Unlike an ordinary resistor, a memristor retains its last resistance state when power is cut. This property allows memristors to function as non-volatile memory, storing data even when powered off, while also performing computational functions, effectively blurring the line between memory and processing.

The physics behind memristors involves the movement of ions within a thin film of material sandwiched between two electrodes. As current flows through the device, these ions shift position, changing the resistance of the material. This physical reconfiguration remains stable even without power, creating a persistent memory effect that’s both energy efficient and potentially much faster to write than flash storage.
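To make the ion-drift picture concrete, here is a minimal sketch of the linear drift model that is often used as a first approximation of a memristor. The state `x` is the fraction of the film that is in its low-resistance (doped) form. The parameter values and the function names (`resistance`, `step`, `hold`) are illustrative assumptions, not figures from any specific device discussed here.

```python
# Illustrative parameters (assumptions, not taken from the article).
R_ON = 100.0       # ohms, resistance when the film is fully doped
R_OFF = 16_000.0   # ohms, resistance when the film is fully undoped
D = 10e-9          # metres, film thickness
MU = 1e-14         # m^2 / (V*s), ion mobility


def resistance(x):
    """Resistance for a normalized doped-region width x in [0, 1]."""
    return R_ON * x + R_OFF * (1.0 - x)


def step(x, voltage, dt):
    """Advance the state by one Euler step under an applied voltage."""
    current = voltage / resistance(x)        # Ohm's law
    dx = MU * R_ON / D**2 * current * dt     # linear ion drift
    return min(1.0, max(0.0, x + dx))        # the boundary stays inside the film


def hold(x, voltage, dt, steps):
    """Hold a constant voltage for `steps` time steps and return the new state."""
    for _ in range(steps):
        x = step(x, voltage, dt)
    return x


x0 = 0.1
x_written = hold(x0, 1.0, 1e-3, 200)        # positive bias lowers resistance
x_idle = hold(x_written, 0.0, 1e-3, 200)    # no bias: the state does not move
x_erased = hold(x_idle, -1.0, 1e-3, 200)    # negative bias raises resistance again
print(resistance(x0), resistance(x_written), resistance(x_idle), resistance(x_erased))
```

In this idealized model the state is perfectly retained at zero bias; real devices drift and degrade over time, which the sketch does not capture.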

What makes memristors particularly exciting is their analog nature – rather than being limited to binary states of 0 and 1, they can exist in multiple resistance states, making them ideal for neuromorphic computing systems that mimic the human brain’s neural networks.
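As a rough illustration of what multiple resistance states buy you, the sketch below maps a normalized weight onto one of a fixed number of evenly spaced conductance levels. The function name `quantize_to_levels`, the 16-level default, and the conductance range are hypothetical choices made for the example; real devices have their own ranges and usually non-uniform levels.

```python
import math


def quantize_to_levels(weight, levels=16, g_min=1e-6, g_max=1e-4):
    """Map a weight in [0, 1] to the nearest of `levels` conductance states.

    Returns (level_index, conductance_in_siemens).
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    if levels < 2:
        raise ValueError("need at least two levels")
    index = round(weight * (levels - 1))
    conductance = g_min + index * (g_max - g_min) / (levels - 1)
    return index, conductance


def bits_per_cell(levels):
    """Information capacity of one cell with `levels` distinguishable states."""
    return math.log2(levels)


print(quantize_to_levels(0.3))   # a 16-level cell
print(bits_per_cell(16))         # versus 1 bit for a binary cell
```

With `levels=2` the same function collapses to ordinary binary storage, which makes the contrast with the multi-level case easy to see.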

Breaking the von Neumann bottleneck

Modern computers suffer from what engineers call the “von Neumann bottleneck” – the separation between storage (memory) and processing (CPU) that forces data to constantly shuttle back and forth between these components. This architecture, fundamental to computing since the 1940s, creates a traffic jam of information that limits performance and consumes significant power.

Memristors potentially solve this problem by enabling in-memory computing, where data processing happens directly within memory cells rather than shuttling information to and from a separate processor. This architectural shift could dramatically accelerate computational speeds while reducing power consumption.
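One commonly described form of in-memory computing is the crossbar array: weights are stored as conductances at the intersections of row and column wires, input voltages are applied to the rows, and each column wire sums the resulting currents. The sketch below models that idea in its most idealized form. The function name `crossbar_mvm` is hypothetical, and effects such as wire resistance, sneak currents, and device noise are deliberately ignored.

```python
def crossbar_mvm(conductances, voltages):
    """Idealized crossbar read-out.

    conductances[i][j] is the conductance (siemens) of the cell joining
    row i to column j; voltages[i] is the voltage applied to row i.
    Each cell passes I = G * V (Ohm's law), and each column wire adds up
    the currents of its cells (Kirchhoff's current law), so the column
    currents are the matrix-vector product of the stored weights and
    the inputs.
    """
    rows = len(conductances)
    if rows == 0 or len(voltages) != rows:
        raise ValueError("need one voltage per row")
    cols = len(conductances[0])
    if any(len(row) != cols for row in conductances):
        raise ValueError("all rows must have the same number of columns")
    return [
        sum(conductances[i][j] * voltages[i] for i in range(rows))
        for j in range(cols)
    ]


G = [[1.0, 2.0],
     [3.0, 4.0]]      # stored weights, as conductances
V = [1.0, 0.5]        # input vector, as row voltages
print(crossbar_mvm(G, V))
```

The point of the design is that the multiply-and-add happens where the weights are stored, so the weight matrix never has to travel to a separate processor.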

Recent benchmarks from researchers at the University of Michigan and several other institutions demonstrated memristor-based neural networks achieving 10 to 100 times better energy efficiency on certain AI tasks than conventional GPU implementations. For edge computing applications, where processing needs to happen on devices rather than in the cloud, an efficiency gain of this size could be transformative.

From theoretical concept to commercial reality

The journey from theory to practical application hasn’t been smooth for memristor technology. After the 2008 breakthrough at HP Labs, many predicted rapid commercialization within a few years. Instead, challenges with manufacturing consistency, longevity, and integration with existing silicon processes slowed progress substantially.

Recent advances, however, indicate the technology is finally maturing. In 2020, startup Weebit Nano successfully demonstrated integration of its silicon oxide ReRAM (resistive RAM, a type of memristor) with standard CMOS manufacturing processes, a crucial step toward mass production. Meanwhile, SK Hynix has invested significantly in developing memristor-based storage class memory that could bridge the gap between DRAM and flash storage.

Most promising is Crossbar, a company dedicated to commercializing ReRAM technology, which recently announced partnerships with several major semiconductor manufacturers to integrate its memristor technology into commercial chips. Its implementation promises storage density approaching that of NAND flash with access speeds closer to those of DRAM, a combination often described as the holy grail of memory technology.

Applications beyond conventional computing

While faster, more efficient computing is an obvious application, memristors open doors to entirely new computing paradigms. Neuromorphic computing, which builds systems that mimic the brain’s neural structure, becomes much more practical with memristors, which can function similarly to biological synapses.

For AI applications, this means the potential for vastly more efficient machine learning systems. Current deep learning models require enormous computational resources for training and inference, but memristor-based neural networks could dramatically reduce these requirements while potentially enabling new approaches to machine learning that more closely resemble biological cognition.

Beyond AI, memristors are finding applications in reconfigurable computing systems that can adapt their hardware configuration on the fly to match workload requirements, potentially replacing FPGAs (Field-Programmable Gate Arrays) with more efficient solutions.

Market outlook and challenges ahead

Industry analysts project the memristor market to reach approximately $4 billion by 2032, growing at a compound annual growth rate of over 30%. This growth is being driven by increasing demand for faster, more energy-efficient computing solutions for data centers, edge computing, and AI applications.
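To put the growth figure in perspective, the compound-growth arithmetic can be run backwards to see what starting market size a projection like this implies. The sketch below assumes a 2023 base year (nine years of growth) and a rate of exactly 30%; both are assumptions for illustration, since the projection only says "over 30%" and gives no base year.

```python
def project(start_value, cagr, years):
    """Value after `years` of compound growth at annual rate `cagr`."""
    return start_value * (1.0 + cagr) ** years


def implied_start_value(end_value, cagr, years):
    """Starting value needed to reach `end_value` after `years` at `cagr`."""
    return end_value / (1.0 + cagr) ** years


END_VALUE = 4e9        # dollars, the projected 2032 market size
ASSUMED_CAGR = 0.30    # assumption: the quoted "over 30%" taken as exactly 30%
ASSUMED_YEARS = 9      # assumption: growth counted from 2023 to 2032

start = implied_start_value(END_VALUE, ASSUMED_CAGR, ASSUMED_YEARS)
print(f"Implied starting market size: ${start / 1e6:.0f} million")
```

Because the quoted rate is a lower bound, a higher actual growth rate would imply an even smaller starting market under the same assumptions.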

However, significant challenges remain before memristors achieve mainstream adoption. Manufacturing consistency continues to be problematic, with some memristor technologies showing uneven performance characteristics across large production batches. Reliability over time and after numerous read/write cycles also requires improvement before critical applications can fully rely on the technology.

Perhaps the biggest hurdle is integration with existing computing ecosystems. Software, firmware, and hardware standards have evolved around conventional memory hierarchies, meaning significant adaptation will be necessary to fully leverage memristor capabilities.

Despite these challenges, the potential benefits are too significant to ignore. As computing demands continue to increase exponentially while gains from traditional silicon scaling diminish, novel approaches like memristor-based computing may be essential to maintaining the pace of technological advancement we’ve come to expect. The next five years will likely determine whether memristors fulfill their promise as the long-missing fourth fundamental circuit element that transforms computing for decades to come.