Edge Computing's Secret Weapon: Fog Computing Bridges the Cloud Gap

Edge computing gets most of the attention, but a lesser-known approach called fog computing is quietly changing how connected devices process information. While edge computing brings processing power closer to data sources, fog computing adds an intermediary layer between those devices and the cloud, which pays off in latency-critical applications. This distributed architecture extends cloud capabilities toward the network edge and addresses problems that purely centralized models struggle to overcome. As IoT devices multiply, fog computing positions itself as the middle tier that could reshape everything from smart cities to industrial automation.

The Architecture That Fills the Mist Between Edge and Cloud

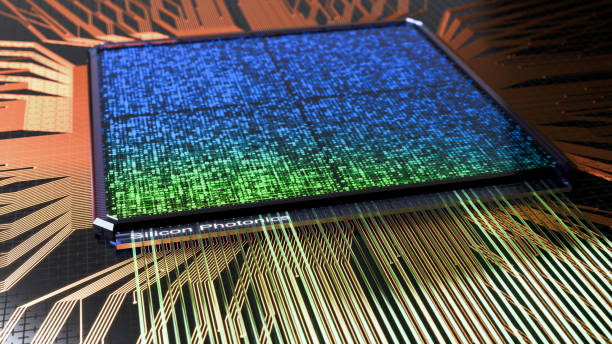

Fog computing represents a significant shift in how we process data across distributed networks. Unlike edge computing, which primarily focuses on moving computational resources directly to end devices, fog computing creates an intermediate processing layer between edge devices and traditional cloud data centers. This architecture distributes computing, storage, and networking services closer to where data originates but maintains a hierarchical structure connected to centralized systems.

The fog layer typically consists of fog nodes—specialized hardware ranging from smart routers and gateways to dedicated micro data centers—that form a mesh network across geographical areas. These nodes collaborate to execute tasks too complex for edge devices but too latency-sensitive to send to distant clouds. This architecture excels particularly in scenarios requiring real-time analytics, as it processes time-sensitive data locally while sending less urgent information to the cloud for deeper analysis and long-term storage.
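The placement logic described above can be sketched in a few lines. This is a minimal illustration, not a reference scheduler: the `Tier` class, the `place_task` function, and every latency and capacity number are assumptions chosen for the example. The rule is simply "use the nearest tier that has enough capacity and still meets the latency budget."

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float  # assumed typical round-trip latency to this tier
    capacity: float       # assumed compute available to a single task


# Illustrative values only; real deployments would measure these.
TIERS = [
    Tier("edge", round_trip_ms=1.0, capacity=1.0),
    Tier("fog", round_trip_ms=10.0, capacity=50.0),
    Tier("cloud", round_trip_ms=120.0, capacity=10_000.0),
]


def place_task(latency_budget_ms: float, compute_needed: float) -> Optional[str]:
    """Return the nearest tier that satisfies both constraints, or None."""
    for tier in TIERS:  # ordered from nearest to farthest
        fast_enough = tier.round_trip_ms <= latency_budget_ms
        big_enough = tier.capacity >= compute_needed
        if fast_enough and big_enough:
            return tier.name
    return None


print(place_task(50, 20))     # fog: too heavy for the edge, too urgent for the cloud
print(place_task(1000, 500))  # cloud: not urgent, needs a lot of compute
print(place_task(5, 20))      # None: no tier meets both constraints
```

A task that comes back as `None` is one the hierarchy cannot serve as specified; in practice it would need a relaxed deadline or a smaller workload.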

Real-Time Decision Making Without the Wait

Latency reduction is one of fog computing’s most compelling advantages. Traditional cloud models introduce delays as data travels to distant data centers for processing, and those delays are often unacceptable where milliseconds matter. Autonomous vehicles are a clear example: by one widely cited industry estimate, a self-driving car generates approximately 4TB of data per day, and it must make split-second decisions to ensure safety.
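To put the 4TB figure in perspective, a quick back-of-the-envelope conversion helps. The sketch below assumes decimal units (1 TB = 10^12 bytes) and, unrealistically, that the data is produced evenly over a full 24 hours; the function name is hypothetical.

```python
BYTES_PER_TB = 10**12            # assumption: decimal terabytes
SECONDS_PER_DAY = 24 * 60 * 60   # assumption: data spread evenly over a day


def sustained_rate_mbps(terabytes_per_day: float) -> float:
    """Average data rate in megabits per second for a given daily volume."""
    bytes_per_second = terabytes_per_day * BYTES_PER_TB / SECONDS_PER_DAY
    return bytes_per_second * 8 / 1_000_000


print(f"{sustained_rate_mbps(4):.0f} Mbit/s")  # prints "370 Mbit/s"
```

Under these assumptions a single vehicle would average roughly 370 Mbit/s around the clock, and real driving would concentrate that volume into fewer hours. Streaming all of it to a distant data center before acting on it is a demanding proposition, which is the motivation for handling the time-critical portion locally.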

Fog computing addresses this challenge by processing critical data locally, allowing autonomous vehicles to make immediate driving decisions while still using cloud resources for mapping updates and fleet management. Similar benefits apply to industrial automation, where equipment faults must be detected quickly to prevent costly downtime. Some manufacturers have reported latency reductions of up to 95% after implementing fog architectures, enabling predictive maintenance systems that respond to equipment anomalies before they become catastrophic failures.

Bandwidth Efficiency in an Increasingly Connected World

With the proliferation of IoT devices—projected to reach 75 billion globally by 2025—network bandwidth constraints represent a growing concern. The sheer volume of data these devices generate threatens to overwhelm traditional network infrastructure. A single smart factory can produce over 5TB of data daily, with only a fraction requiring comprehensive cloud analysis.

Fog computing offers a solution through intelligent data filtering and aggregation. By processing data closer to its source, fog nodes can extract relevant insights while discarding redundant information before transmission to the cloud. This approach dramatically reduces bandwidth consumption—some implementations report bandwidth savings of 30-40%—while still preserving analytical capabilities. Industries with remote operations like oil and gas, where connectivity may be limited and expensive, benefit particularly from this efficiency. Offshore platforms implementing fog computing have reduced satellite bandwidth requirements by up to 60% while maintaining operational visibility.
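One simple way a fog node might filter and aggregate sensor data is a deadband filter followed by a summary. The sketch below is illustrative only: the function names, the threshold, and the sample temperatures are assumptions, and the reduction it achieves on this toy input says nothing about the savings figures quoted above.

```python
from statistics import mean


def deadband_filter(readings, threshold):
    """Keep a reading only if it differs from the last kept one by more than threshold."""
    kept = []
    for value in readings:
        if not kept or abs(value - kept[-1]) > threshold:
            kept.append(value)
    return kept


def summarize(readings):
    """Collapse a window of readings into one compact record for the cloud."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Hypothetical temperature samples from one sensor.
window = [20.0, 20.1, 20.05, 21.0, 21.02, 19.5]

forwarded = deadband_filter(window, threshold=0.5)
print(forwarded)          # [20.0, 21.0, 19.5]
print(summarize(window))  # one summary record instead of six raw readings
```

The two techniques suit different needs: the deadband filter preserves the shape of the signal while dropping near-duplicate samples, whereas the summary discards the individual readings entirely and sends only statistics.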

Security Reinvented for Distributed Systems

Security concerns have long haunted IoT implementations, with distributed devices creating expanded attack surfaces and potential vulnerabilities. Fog computing introduces a new security paradigm that addresses many of these challenges through distributed safeguards and localized threat detection.

By implementing security measures at fog nodes throughout the network, organizations can establish multiple layers of protection rather than relying solely on centralized cloud security. This approach allows for context-aware security policies that adapt to local conditions and threats. Additionally, fog nodes can implement anomaly detection algorithms that identify potential breaches faster by analyzing traffic patterns closer to endpoints.
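As a rough illustration of anomaly detection at a fog node, the sketch below flags a traffic measurement that sits far from the recent average. It is a deliberately simple rolling z-score check, not a production intrusion detection method; the class name, window size, threshold, and sample values are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values far from the mean of a sliding window of recent normal values."""

    def __init__(self, window=30, z_threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, value):
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Only normal values update the baseline, so a burst cannot mask itself.
            self.history.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
baseline = [100, 102, 98, 101, 99, 100, 103, 97]  # hypothetical packets per second
for rate in baseline:
    detector.observe(rate)

print(detector.observe(500))  # True: far outside the recent range
print(detector.observe(101))  # False: back to normal
```

Because the check runs next to the endpoints, a flagged value can trigger a local response, such as rate limiting, without waiting on a round trip to the cloud.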

The healthcare sector illustrates these advantages. Medical IoT devices handling sensitive patient data can use fog nodes to anonymize information before cloud transmission, which supports regulatory compliance while still enabling analytics. Several major hospital systems have reported reducing security incidents by 40% after implementing fog computing architectures that localize threat detection and response.
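A fog node might strip identifying fields from a record before forwarding it, along the lines of the sketch below. Strictly speaking this is pseudonymization (a keyed hash replaces the patient ID) rather than full anonymization, and it is not by itself sufficient for any particular regulation. The field names, the `pseudonymize` function, and the key handling are assumptions for illustration.

```python
import hashlib
import hmac

# Assumed set of fields that identify a person directly.
DIRECT_IDENTIFIERS = {"name", "address", "phone"}


def pseudonymize(record, secret_key):
    """Drop direct identifiers and replace patient_id with a keyed hash token."""
    token = hmac.new(
        secret_key, record["patient_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "patient_id"
    }
    cleaned["patient_token"] = token
    return cleaned


# Hypothetical record; the key would stay on the fog node, never in the cloud.
reading = {
    "patient_id": "P-1042",
    "name": "Jane Example",
    "phone": "555-0100",
    "heart_rate": 72,
}
print(pseudonymize(reading, secret_key=b"local-node-secret"))
```

Using a keyed hash means the same patient always maps to the same token, so cloud analytics can still group readings per patient, while anyone without the key cannot recompute the token from a known ID.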

Economic Viability: The Cost-Benefit Analysis

The economic case for fog computing becomes increasingly compelling as IoT deployments scale. While implementing fog architecture requires investment in fog nodes—typically ranging from $500 for basic gateways to $10,000 for sophisticated micro data centers—these costs are often offset by significant operational savings.

Reduced cloud service costs represent the most immediate benefit, as fog computing minimizes the amount of data that requires expensive cloud storage and processing. Organizations implementing comprehensive fog architectures report cloud service cost reductions of 30-50%. Energy efficiency improvements provide another economic advantage, particularly for battery-powered IoT deployments. By minimizing data transmission requirements, fog computing can extend device battery life by up to 40%, dramatically reducing maintenance costs in large-scale deployments.
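The trade-off between hardware cost and cloud savings can be framed as a simple payback calculation. The sketch below uses the node price and savings range quoted in this section, but the node count and monthly cloud bill are hypothetical inputs, and the model ignores installation, power, and maintenance costs.

```python
def payback_months(node_cost, node_count, monthly_cloud_bill, cloud_savings_rate):
    """Months until cumulative cloud savings cover the upfront fog hardware cost."""
    upfront = node_cost * node_count
    monthly_savings = monthly_cloud_bill * cloud_savings_rate
    if monthly_savings <= 0:
        return None  # no savings, so the investment never pays back
    return upfront / monthly_savings


# Hypothetical deployment: 20 basic gateways at $500 each and a $5,000
# monthly cloud bill, at the low end (30%) of the reported savings range.
months = payback_months(500, 20, 5000, 0.30)
print(f"{months:.1f} months")  # prints "6.7 months"
```

Rerunning the same function with $10,000 micro data centers, or with a smaller cloud bill, shows how quickly the payback period stretches, which is why the economics depend heavily on deployment scale.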

Telecommunications companies have been early adopters, using fog computing to optimize their network operations. Major carriers have reported infrastructure savings exceeding $15 million annually through more efficient resource utilization enabled by fog computing’s distributed processing model.

The Road Ahead: From Fog to Harmonized Computing

As fog computing matures, integration with complementary technologies promises to further enhance its capabilities. The convergence of fog computing with 5G networks is expected to enable ultra-reliable low-latency applications that are impractical on current infrastructure. Meanwhile, specialized AI accelerators optimized for fog nodes are beginning to emerge, allowing sophisticated machine learning algorithms to run efficiently at the network edge.

Industry standardization efforts, particularly through the OpenFog Consortium (since merged into the Industrial Internet Consortium, and whose reference architecture was adopted as IEEE 1934), are addressing interoperability challenges that previously hindered widespread adoption. These standards are intended to let fog deployments scale more efficiently across diverse hardware and software environments.

For organizations contemplating fog computing implementation, starting with targeted applications that demonstrate clear latency or bandwidth benefits provides the most straightforward path. As the ecosystem matures and deployment costs decrease, fog computing is positioned to become an essential component of the distributed computing landscape, bridging the gap between edge devices and the cloud while enabling entirely new classes of applications.